Article of the Month - January 2019


The Future of Authoritative Geospatial Data in the Big Data World – Trends, Opportunities and Challenges

Kevin McDougall and Saman Koswatte, Australia


This article in .pdf-format (16 pages)

This paper was presented at the FIG Commission 3 meeting in Naples, Italy, and was chosen by Commission 3 as paper of the month. The paper examines the drivers of the “Big Data” phenomenon and seeks to identify how authoritative data and big data may co-exist.

SUMMARY

The volume of data and its availability through the internet

is impacting us all. The traditional geospatial industries

and users have been early adopters of technology, initially

through the early development of geographic information systems

and more recently via information and communication technology

(ICT) advances in data sharing and the internet. Mobile

technology and the rapid adoption of social media applications

has further accelerated the accessibility, sharing and

distribution of all forms of data including geospatial data. The

popularity of crowd-sourced data (CSD) now provides users with a

high degree of information currency and availability but this

must also be balanced with a level of quality such as spatial

accuracy, reliability, credibility and relevance. National

and sub-national mapping agencies have traditionally been the

custodians of authoritative geospatial data, but the lack of

currency of some authoritative data sets has been questioned.

To this end, mapping agencies are transitioning from inwardly

focussed and closed agencies to outwardly looking and accessible

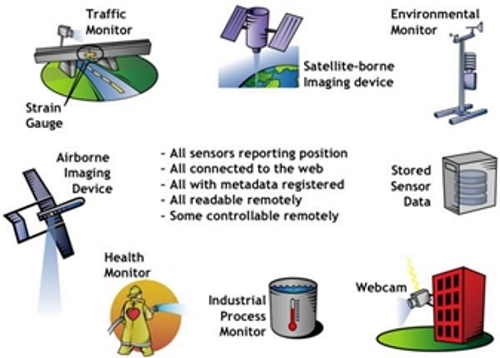

infrastructures of spatial data. The Internet of Things

(IoT) and the ability to inter-connect and link data provides

the opportunity to leverage the vast data, information and

knowledge sources across the globe. This paper will examine the

drivers of the “Big Data” phenomena and look to identify how

authoritative and big data may co-exist.

1. INTRODUCTION

There is no doubt that advances in technology are having a significant impact across society. In particular, the connectivity provided through advances in information and communication technology (ICT) has enabled citizens, businesses and governments to connect and exchange data with increasing regularity and volume. With the exponential growth in the number of sensors and their connectivity through the internet, the volume, frequency and variety of data have changed dramatically in the past decade. The connection of devices such as home appliances, vehicles, surveillance cameras and environmental sensors to the internet is often termed the Internet of Things (IoT). In addition, the capability of cloud computing platforms to store, organise and distribute this data has created massive inter-connected repositories of data that are commonly termed “Big Data”.

In many instances, the digital exchange of data has replaced direct human interaction through the automation of processes and the use of artificial intelligence. The geospatial industries and users have been early adopters of this technology, initially through the development of geographic information systems (GIS) and more recently via ICT advances in data sharing and the internet. Mobile technology and the rapid adoption of social media and other applications have further accelerated the accessibility, sharing and distribution of all forms of data, particularly geospatial data. Crowd-sourced data (CSD) now enables users to collect and distribute information with a high degree of currency. However, the availability of CSD must be balanced with an understanding of data quality, including spatial accuracy, reliability, credibility and relevance.

The rate of technological change has been extremely rapid when considered in the context of the operations of traditional geospatial mapping agencies. This paper examines the developments of the “Big Data” phenomenon in terms of the trends, opportunities and challenges for traditional geospatial custodians and authoritative data environments. Mapping agencies have transitioned from inwardly focussed organisations to increasingly outwardly looking and accessible data infrastructures in a relatively short period of time. This has been accomplished not only through developments in ICT and positioning technology, but also through changes in government policies and the realisation that existing systems were not serving the key stakeholders: the citizens.

This paper first covers the progress of digital geospatial data repositories and their transition towards spatial data infrastructures (SDIs). The development of more open data frameworks and policies, and the rapid increase in the quantity and variability of data, have created a number of challenges and opportunities for mapping agencies. The ability to inter-connect and link data has provided the opportunity to leverage the vast data, information and knowledge sources across the globe. However, the geospatial data collection and management approaches of the past may not be optimal in the current big data environment. Data veracity, volume, accessibility and the rate of change mean that new approaches are required to understand, analyse, consume and visualise geospatial data. Data mining, data analytics and artificial intelligence are now common practice within search engines, but how can these approaches be used to improve the value and reliability of our authoritative data sources?

2. Authoritative Geospatial Data and Spatial Data Infrastructures

The term ‘authoritative geospatial data’ describes a data set that is officially recognised, can be certified and is provided by an authoritative source. An authoritative source is an entity (usually a government agency) that is given authority to manage or develop data for a particular business purpose. Trusted data, or a trusted source, is a term often associated with authoritative data; however, it can also refer to a subsidiary source or subset of an authoritative data set. The data may be considered trusted if there is an official process for compiling the data to produce the data subset or a new data set.

In most countries, authoritative geospatial data is generally the responsibility of National Mapping Agencies (NMAs). Lower levels of government, such as state and local government, may also assume responsibility for the acquisition and maintenance of authoritative geospatial data sets. Traditionally these data sets have included foundational geospatial data themes that support core government and business operations. In Australia and New Zealand, the Foundation Spatial Data Framework (http://fsdf.org.au) identifies ten key authoritative geospatial themes:

- Street address for a home or business

- Administrative boundaries

- Geodetic framework

- Place names and gazetteer

- Cadastre and land parcels

- Water including rivers, streams, aquifers and lakes

- Imagery from satellite and airborne platforms

- Transport networks including roads, streets, highways, railways, airports and ports

- Land use and land cover

- Elevation data including topography and depth

In addition to the foundational geospatial data detailed above, other geospatial data sets may also be considered authoritative by particular government agencies.

When exploring the evolution of SDIs, it can be seen that the majority of SDIs have been led by the national mapping agencies of various countries (McDougall 2006) and have treated users as passive recipients of data (Budhathoki & Nedovic-Budic 2008). However, users are now active consumers of vast quantities of data and can also potentially contribute towards the development of SDIs. In addressing this issue, Budhathoki and Nedovic-Budic (2008) suggest that the role of the ‘user’ of spatial data infrastructures should be reconceptualised as a ‘producer’, and that crowd-sourced spatial data should be included in SDI-related processes.

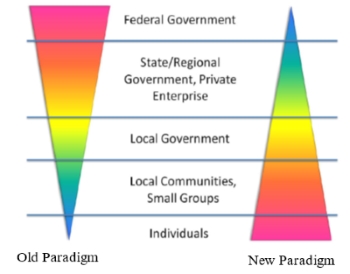

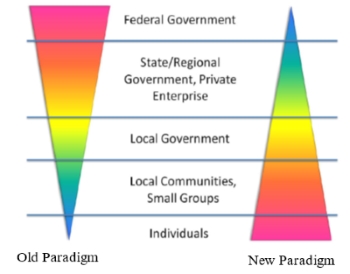

Traditionally, SDIs have a top-down structure in which organisations govern all the processes and the user generally receives the final product. This is a mismatch with the new concepts of the interactive web and crowd-sourced data. Hence, Bishr and Kuhn (2007) suggest inverting the process from top-down to bottom-up, which clears the path for next-generation SDIs. In the 1990s, the accepted spatial data model was pyramid-shaped (Figure 1) and based on government data sources, but in more recent years this pyramid has increasingly been inverted (Bishr & Kuhn 2007). Application Programming Interfaces (APIs) can make SDIs more user-friendly, and therefore ‘it is likely that SDIs and data stores will need to be retro-fashioned into API interrogation systems to ease the integration of past and future data sets’ (Harris & Lafone 2012). Bakri and Fairbairn (2011) developed a semantic similarity testing model for connecting user generated content and formal data sources, since connecting disparate data models requires the generation of a common domain language.
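Harris and Lafone's point about retro-fitting SDIs into ‘API interrogation systems’ can be made concrete with a request against an OGC API - Features service, one widely used open standard for serving vector data over HTTP. The sketch below only builds the request URL. The service root and the `cadastral-parcels` collection name are hypothetical, and the paper does not prescribe this or any other particular API.

```python
from urllib.parse import urlencode

# Hypothetical SDI service root; a real deployment would publish its own.
BASE_URL = "https://sdi.example.gov.au/ogcapi"


def items_url(collection_id: str, bbox: tuple, limit: int = 100) -> str:
    """Build an OGC API - Features 'items' request for one collection.

    bbox is (min_lon, min_lat, max_lon, max_lat) in WGS 84 longitude/latitude,
    the default coordinate order for the standard's bbox parameter.
    """
    if len(bbox) != 4:
        raise ValueError("bbox needs four values")
    query = urlencode(
        {"bbox": ",".join(str(v) for v in bbox), "limit": limit},
        safe=",",  # leave the commas in the bbox unescaped for readability
    )
    return f"{BASE_URL}/collections/{collection_id}/items?{query}"


# Parcels in a small window (hypothetical collection name and extent).
print(items_url("cadastral-parcels", (151.90, -27.60, 152.00, -27.50), limit=50))
```

A conforming server returns the matching features as GeoJSON, with `next` links in each response for paging through larger result sets.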

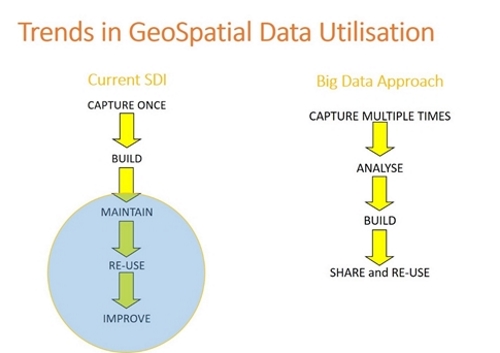

Figure 1: The old and new paradigms of spatial data sources (Harris and Lafone, 2012)

3. SDI and Crowd-Sourced Geospatial Data

A key issue with respect to SDIs is maintaining the currency of their spatial data. There are thousands of SDIs throughout the world at regional, state and local levels (Budhathoki & Nedovic-Budic 2008). Meanwhile, the seven billion humans ‘constantly moving about the planet collectively possess an incredibly rich store of knowledge about the surface of the earth and its properties’ (Goodchild 2007). The popularity of mobile devices enabled with location sensors (GNSS, Global Navigation Satellite Systems), along with interactive web services such as Google Maps and OpenStreetMap (OSM), has created marvellous platforms for citizens to engage in mapping-related activities (Elwood 2008). These platforms can support and encourage crowd-sourced geospatial data.

The voluntary creation of geographic information is not new (Goodchild 2007). However, researchers are still struggling to understand what motivates volunteers to generate geographic information. Additionally, sensor-enabled devices ‘can collect data and report phenomena more easily and cheaply than through official sources’ (Diaz et al. 2012). Information infrastructures, via the IoT, and easily accessible positioning devices (GNSS) have enabled ‘users from many differing and diverse backgrounds’ (McDougall, 2009) to ‘share and learn from their experiences through text (blogs), photos (Flickr, Picasa, Panoramio) and maps (GoogleMaps, GoogleEarth, OSM) not only seeking but also providing information’ (Spinsanti & Ostermann 2010).

SDIs are generally considered more formal infrastructures, being highly institutionalised and having more traditional architectures. In line with the SDI framework, each data set usually undergoes thorough standardisation procedures, and SDI data is generally handled by skilled and qualified people. Therefore, the cost of creation and management is high. SDIs are mainly held by governments and are mostly standards-centric, as standards are important for structuring and communicating data. Standardisation covers both structure (syntax) and meaning (semantics) (Hart & Dolbear 2013). In contrast, crowd-sourced geospatial data generally comes from citizens, and hence the information is often unstructured, improperly documented and loosely coupled with metadata. However, crowd-generated geospatial data is generally more current and diverse than SDI data.

Jackson et al. (2010) studied the synergistic use of authoritative government and crowd-sourced data for emergency response. They critically compared the clash of the two paradigms of crowd-sourced data and authoritative data, as summarised in Table 1.

Table 1: A comparison of two paradigms: Crowd-sourcing and Authoritative Data (Jackson et al., 2010)

| Crowd-sourcing | | Authoritative Government Data |
|---|---|---|
| ‘Simple’ consumer driven Web services for data collection and processing. | Vs. | ‘Complex’ institutional survey and GIS applications. |
| Near ‘real-time’ data collection and continuing data input allowing trend analysis. | Vs. | ‘Historic’ and ‘snap-shot’ map data. |
| Free ‘un-calibrated’ data but often at high resolution and up-to-the-minute. | Vs. | Quality assured ‘expensive’ data. |
| ‘Unstructured’ and mass consumer driven metadata and mashups. | Vs. | ‘Structured’ and institutional metadata in defined but often rigid ontologies. |
| Unconstrained capture and distribution of spatial data from ‘ubiquitous’ mobile devices with high resolution cameras and positioning capabilities. | Vs. | ‘Controlled’ licensing, access policies and digital rights. |
| Non-systematic and incomplete coverage. | Vs. | Systematic and comprehensive coverage. |

As this table shows, the two forms of data may seem diametrically opposed.

A major deficiency of authoritative data held in SDIs is often its lack of currency, which commonly results from time-consuming data collection, checking and management by government agencies. Processes to capture, compile, generate and update authoritative geospatial data have been developed by agencies over long periods of time and were commonly aligned to traditional map production operations. Although technology has dramatically improved the performance of the processes used to update many of the data themes, bottlenecks still exist in a number of them. These bottlenecks have been further exacerbated by the downsizing of mapping authorities, as many of the existing production processes are either no longer required or can be undertaken more efficiently by commercial organisations. The reluctance of mapping agencies to use crowd-sourced data is slowly changing, and many organisations are now adapting their processes to use current user-generated data to improve their authoritative data sets.

4. Big Geospatial Data

Geospatial data has always been considered to have more

complex and larger data sets relative to many other

applications. The graphical and visual context of

geospatial data, particularly imagery, has to some extent,

prepared surveyors and geospatial professionals for the data

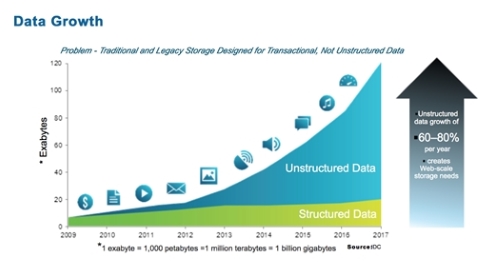

centric future that now exists. The term “big data” was coined

over 20 years ago although it has only developed a much clearer

focus in the past decade as a result of technological

developments, increased use of sensors and the proliferation of

mobile communication technologies. Li et al. (2016)

identified that big data can be classified as either

unstructured or structured data sets with massive volumes that

cannot be easily captured, stored, manipulated, analysed,

managed or presented by traditional hardware, software and

database technologies.

Big data has been described via a range of characteristics which seek to differentiate it from other forms of data (Evans et al. 2014; Li et al. 2016). The six Vs provide a useful starting point for understanding the challenges that geospatial scientists, surveyors and users face when big geospatial data is considered (Li et al. 2016). These characteristics are:

- Volume

- Variety

- Velocity

- Veracity

- Visualisation, and

- Visibility

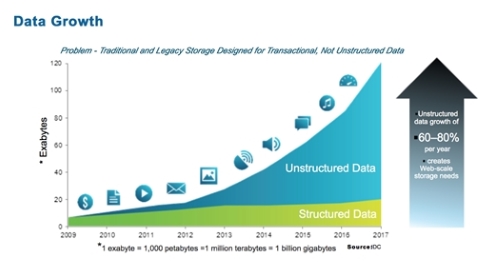

The volume of data is perhaps the most obvious characteristic of big data. Large geospatial data sets that are now commonly acquired and used in the form of remotely sensed imagery, point cloud data, location sensor data and social media are creating data in the petabytes. The data sets are not only of much higher resolution, but they are also being captured more regularly by more sensors. This presents challenges not only with storage but also with integrating, resourcing and analysing the data.

Figure 2: Big data growth has been exponential (Source: www.dlctt.com)

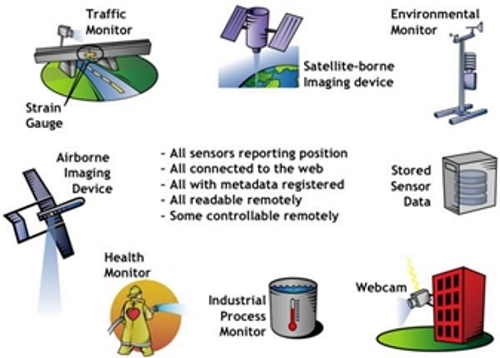

The variety of data is also ever increasing and changing. Data is being captured in traditional vector and raster formats as well as point clouds, text, geo-tagged imagery, building information models (BIM) and data from sensors of various forms. With the variety of data comes a variety of data formats, some structured and many unstructured, which further complicates analysis. Many data sets have proprietary formats that do not readily comply with data exchange protocols. The recent data breaches at a number of large corporations have also highlighted the need to remove the personal identifiers that are often incorporated within these data sets.
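A minimal sketch of removing personal identifiers from geo-tagged records before they are shared, assuming a hypothetical `GeoPost` record holding a user ID, free text and a position. It drops the identifier and rounds the coordinates to a coarser grid; the rounding level is an illustrative choice, not a recommended privacy standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeoPost:
    """A hypothetical geo-tagged social media record."""
    user_id: str  # personal identifier that should not be redistributed
    text: str
    lat: float
    lon: float


def deidentify(post: GeoPost, decimals: int = 3) -> dict:
    """Drop the user identifier and coarsen the coordinates.

    Three decimal places of latitude is roughly 110 m; longitude cells are
    narrower away from the equator. Coarsening lowers, but does not remove,
    the risk of re-identification, and free text may still hold personal details.
    """
    return {
        "text": post.text,
        "lat": round(post.lat, decimals),
        "lon": round(post.lon, decimals),
    }


record = deidentify(GeoPost("user-8841", "Road flooded near the bridge", -27.56123, 151.95377))
print(record)
```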

Figure 3: Increasing variety of sensors (Source: www.opengeospatial.org)

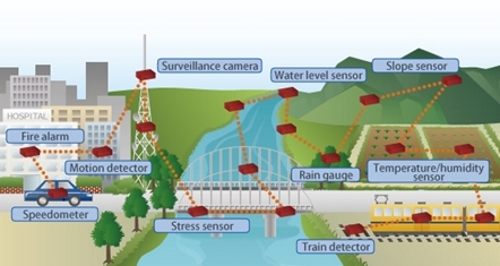

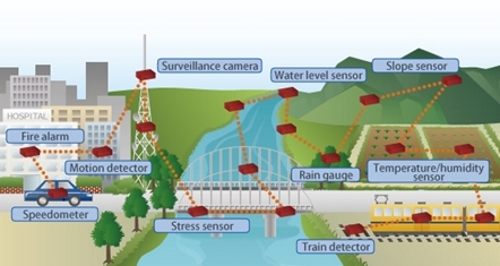

The rate of capture, or velocity, of data collection is directly related to the number of sensors and their capability to collect data more frequently, the ability to transmit data through wider bandwidth, and increased levels of connectivity through the internet. User expectations and behaviour, particularly on social media platforms, are also driving increased demand for real-time data.

Figure 4: Increasing number and rate of data capture from sensor networks (Source: Fujitsu Intelligent Society Solution – Smart Grid Communications)

The veracity, or quality, of geospatial data varies dramatically. Many large geospatial data sets acquired from sources such as satellite imagery generally have well-known data quality characteristics. However, there are many sources of data where the quality is unknown or where the quality information provided may not be accurate. This continues to be a major challenge in using the emerging data sources alongside traditional or authoritative data sources. Research continues on approaches and methods to validate data quality and ensure it is “fit for purpose”.
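One common way to test whether crowd-sourced positions are “fit for purpose” is to compare them with authoritative check points and summarise the horizontal error as a root mean square error (RMSE). A minimal sketch, assuming the points are already paired and given as (latitude, longitude) in degrees; the tolerance is set by the intended application and is not taken from the paper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def local_distance_m(p, q):
    """Approximate ground distance between two (lat, lon) points in degrees.

    Uses an equirectangular approximation, which is adequate for the short
    offsets (metres to a few kilometres) examined in accuracy checks.
    """
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    x = (lon2 - lon1) * math.cos((lat1 + lat2) / 2)
    y = lat2 - lat1
    return EARTH_RADIUS_M * math.hypot(x, y)


def horizontal_rmse(test_points, reference_points):
    """RMSE of horizontal offsets between paired test and reference points."""
    if not test_points or len(test_points) != len(reference_points):
        raise ValueError("need equal-length, non-empty lists of paired points")
    squared = [local_distance_m(t, r) ** 2 for t, r in zip(test_points, reference_points)]
    return math.sqrt(sum(squared) / len(squared))


def fit_for_purpose(test_points, reference_points, tolerance_m):
    """True when the RMSE does not exceed the application's tolerance."""
    return horizontal_rmse(test_points, reference_points) <= tolerance_m
```

For example, `fit_for_purpose(crowd_points, control_points, 5.0)` would accept the crowd-sourced points for a purpose needing about 5 m horizontal accuracy. Formal positional accuracy standards typically add a confidence-level scaling and minimum sample sizes, which this sketch omits.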

Geospatial data is multi-dimensional and has traditionally been presented as charts, tables, drawings, maps and imagery. The ability to reorganise data using a geospatial framework provides a powerful tool for decision makers to interpret complex data quickly through visualisation tools such as 3D models, animations and change detection.

Figure 5: Pattern analysis (Source: www.hexagongeospatial.com)

The improved inter-connectivity of sensors and the repositories where data is stored now enables data to be readily integrated like never before. Personal and closed repositories are being replaced by cloud storage technology, which allows not only secure storage but also the ability to make the data discoverable and visible to other users. Sharing data with specific individuals or with everyone is now possible and easily achieved.

5. Trends and Drivers in Big Geospatial Data Growth

Many of the current systems and institutional processes for managing geospatial data were not designed for the current dynamic and demanding information environment. The focus for national and sub-national mapping agencies has been to provide reliable and trusted data for their primary business or to meet legislative requirements. These environments are generally restrictive in their data sharing arrangements, and most data is held within institutional repositories in government agencies, which often brings a high degree of institutional inertia. Due to the time and cost of implementing new data models and strategies, these organisations are also often slow to respond to new information management approaches and are conservative in their data sharing due to government restrictions and legislation.

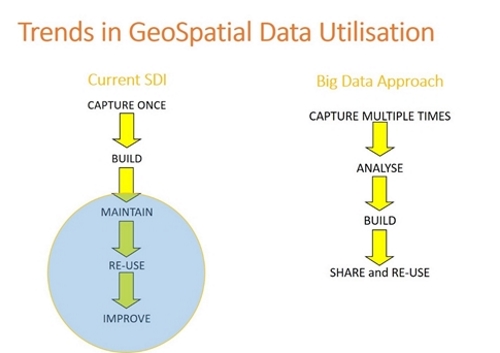

The development of spatial data infrastructures has followed the approach of capturing data once and then using it many times. The continual improvement of spatial data infrastructures has relied on the fact that many of the authoritative data sets will remain largely stable in their format, structures and business needs. This has enabled these data sets to be continually maintained and improved using traditional approaches, but it may not encourage innovation or change.

However, big data approaches are now challenging this paradigm (Figure 6). The continuous capture and re-capture of data from multiple sensors has opened up new opportunities and approaches. Integrating and analysing multiple data sets has allowed new data sets to be built and customised for users. Being able to share and re-share this data through multiple platforms, including social media, has created expectations that all data should be available in a user-friendly and timely manner.

Figure 6: Changing trends in geospatial data utilisation

The growth in geospatial data demand and utilisation is driven by a number of factors, including:

- Advances in information technology and communication

- Smartphone technology

- New sensor technologies, and

- Mobile user applications and business opportunities

The improvement in the communication technologies and data

infrastructure has been a fundamental driver for increased

growth and utilisation. High quality broadband

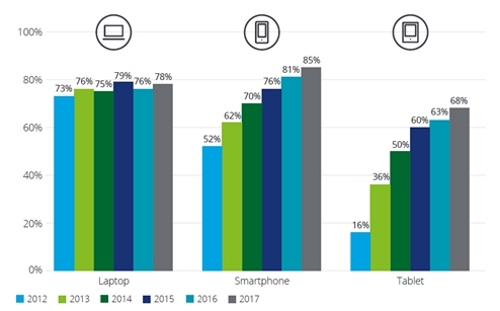

infrastructure and high speed mobile phone and data technologies

has enabled developing, emerging and developed societies to

rapidly utilise the new mobile technologies. Smartphones

and tablets are now the technology of choice for accessing and

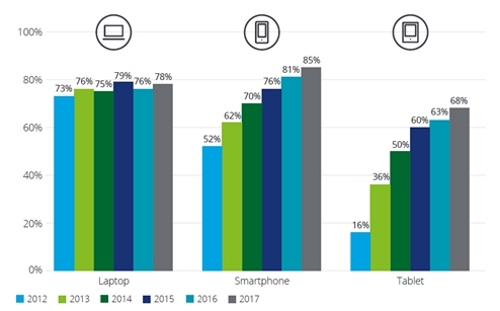

communicating data for the majority of users. Just as the growth

in laptop devices recently overtook the growth in desktop

computers, smartphone utilisation by adults in the UK has now

overtaken the utilisation of laptops (Figure 7). This trend

continues to support the preference for mobile technology, not

just with teenagers but also by adults. Smartphones and tablet

technologies now provide the benefit of mobility with the data

capabilities of many laptops and desktops through the mobile

applications and tools for both business and private usage.

Figure 7: Smartphone, laptop and tablet penetration among UK adults, 2012-17 (Source: Deloitte Mobile Consumer Survey, 2017)

The dramatic impact of mobile phone technology and data communication on society has been supported by the development of mobile software applications. These applications range from simple web search tools and utilities to complex business tools and social media. Social media applications such as Twitter, Facebook and Instagram now have billions of users and followers generating massive volumes of data, including text messages, images and videos. The connectivity to other data sources via the internet has created an incredible network of data linkages and forms the foundation of the business model these platforms use to monetise their user interactions.

6. Challenges and Opportunities to Link Big Data and Authoritative Geospatial Data

An enormous amount of data is now created and used, not only by commercial organisations and governments but also by the billions of individual users of ICT. In recent times, there has been increased interest in the use of big data and crowd-sourced data (CSD) for both research and commercial applications. Volunteered Geographic Information (VGI) (Goodchild 2007), with its geographic context, can be considered a subset of CSD (Goodchild & Glennon 2010; Heipke 2010; Howe 2006; Koswatte et al. 2016). VGI production and use have also become simpler than ever before with technological developments in mobile communication, positioning technologies, smartphone applications and other infrastructure that supports easy-to-use mobile applications.

However, data quality issues such as credibility, relevance, reliability, data structures, incomplete location information, missing metadata and validity continue to be among big data’s major challenges and can limit its usage and potential benefits (De Longueville et al. 2010; Flanagin & Metzger 2008; Koswatte et al. 2016). Research on extracting useful geospatial information from large social media data sets to support disaster management (de Albuquerque et al. 2015; Koswatte et al. 2015), and on updating authoritative data sets from non-authoritative data such as OpenStreetMap (Zhang et al. 2018), has identified the potential of geospatial data analytics.

Data analytics and machine learning are already widely used to analyse the large volumes of data collected by search engine and social media companies. Similarly, opportunities exist within big geospatial data to improve the quality and currency of existing authoritative data sets. Although authoritative geospatial data is generally of very high quality, errors in these data sets are not uncommon. For example, much of the data in early versions of Google Maps was acquired from authoritative geospatial sources. However, that data was not originally designed for navigational purposes, and therefore some roads that existed in a database had not yet been built and hence could not be used for navigation. As Figure 8(a) illustrates, the 2009 version of Google Maps for an area in Queensland, Australia, provided incorrect navigation data that would have directed the driver through a farm and into a river. Nine years later, with improved data provided through geospatial data analytics, the correct navigational route has been identified (Figure 8(b)).


Figure 8: (a) 2009 incorrect Google Maps directions, with the road passing through a river, and (b) 2018 updated and correct directions based on driver information
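The Google Maps example suggests one simple analytic: a road segment that appears in a database but is never travelled by any vehicle may not exist on the ground. The sketch below flags such segments by counting GPS fixes within a buffer of each segment. It is not how Google corrected its data, which the paper does not describe; it assumes projected planar coordinates in metres, and the segment IDs, traces and 15 m buffer are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to segment a-b (planar coordinates, metres)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:  # degenerate segment: a and b coincide
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment's end points.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def unverified_segments(segments, gps_points, buffer_m=15.0, min_hits=1):
    """Return IDs of segments with fewer than min_hits GPS fixes within buffer_m."""
    flagged = []
    for seg_id, (a, b) in segments.items():
        hits = sum(1 for p in gps_points if point_segment_distance(p, a, b) <= buffer_m)
        if hits < min_hits:
            flagged.append(seg_id)
    return flagged


roads = {"farm-track-12": ((0.0, 50.0), (100.0, 50.0)), "highway-3": ((0.0, 0.0), (100.0, 0.0))}
traces = [(10.0, 2.0), (50.0, -3.0), (90.0, 1.0)]
print(unverified_segments(roads, traces))
```

The brute-force loop compares every fix with every segment; at big data volumes a spatial index would be needed, and a flagged segment is a prompt for an editor to check rather than proof that the road is missing.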

One of the major challenges for mapping agencies is to identify changes in their data themes when new development or other changes occur, for example when a new property is constructed or a building footprint is altered. In these instances, it would be beneficial to periodically analyse high resolution satellite imagery to identify these temporal changes and alert data editors that potential changes have occurred. Geospatial analytics of big data can facilitate this change detection through temporal analysis of high resolution imagery at regular intervals.
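The simplest form of the temporal analysis described above is image differencing: compare two co-registered images of the same area and flag pixels whose values change by more than a threshold. The sketch below uses plain nested lists so that it is self-contained. The threshold, the review fraction and the tile values are hypothetical, and operational change detection also needs radiometric normalisation and usually a classification of what has changed.

```python
def change_mask(before, after, threshold):
    """Per-pixel change mask from two co-registered single-band images.

    before and after are equal-sized 2D lists of pixel values; a pixel is
    marked changed when the absolute difference exceeds threshold.
    """
    if len(before) != len(after) or any(len(r0) != len(r1) for r0, r1 in zip(before, after)):
        raise ValueError("images must have identical dimensions")
    return [
        [abs(new - old) > threshold for old, new in zip(row_before, row_after)]
        for row_before, row_after in zip(before, after)
    ]


def changed_fraction(mask):
    """Share of pixels marked as changed."""
    cells = [flag for row in mask for flag in row]
    return sum(cells) / len(cells)


def needs_review(before, after, threshold, min_fraction):
    """Alert a data editor when enough of the tile appears to have changed."""
    return changed_fraction(change_mask(before, after, threshold)) >= min_fraction


# Tiny illustrative tiles: two pixels brighten sharply (e.g. a new roof).
tile_2017 = [[10, 10, 10], [10, 10, 10]]
tile_2018 = [[10, 12, 80], [9, 90, 10]]
print(needs_review(tile_2017, tile_2018, threshold=30, min_fraction=0.25))
```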

Artificial intelligence (AI) encompasses a range of approaches that can lead to the automated analysis of large data sets. Rules-based approaches provide a framework for analysing data based on defined requirements or rules. These approaches may be suitable for analysing geospatial data held in regulatory environments where certain conditions are required for compliance. For example, minimum clearances or offsets from the boundaries of a property may be required under planning regulations. Machine learning, on the other hand, analyses data to identify patterns, learns from these patterns and can then self-improve once the system is trained. It is used widely in analysing patterns of internet searching and social media, which can help predict the needs and preferences of users in order to better customise searches and market products. A hybrid approach that draws on a combination of machine learning and existing rule sets, such as geographic place name gazetteers, may provide a suitable platform for improving geospatial databases.
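The planning example lends itself to a rules-based check. A minimal sketch, assuming the parcel boundary and building footprint are simple polygons in a projected coordinate system (metres) and using a hypothetical minimum setback; real planning schemes often set different offsets for front, side and rear boundaries, which this single rule ignores.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to segment a-b (planar coordinates, metres)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(px - ax, py - ay)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def edges(ring):
    """Consecutive vertex pairs of a polygon ring, closing back to the start."""
    return zip(ring, ring[1:] + ring[:1])


def boundary_clearance(footprint, parcel):
    """Minimum distance between a building footprint and its parcel boundary.

    Assumes the footprint lies strictly inside the parcel. For non-crossing
    boundaries the closest approach always involves a vertex of one polygon,
    so checking vertices of each against the edges of the other is sufficient.
    """
    inner = min(point_segment_distance(p, a, b) for p in footprint for a, b in edges(parcel))
    outer = min(point_segment_distance(p, a, b) for p in parcel for a, b in edges(footprint))
    return min(inner, outer)


def complies(footprint, parcel, min_setback_m):
    """The rule: the whole building stays at least min_setback_m from the boundary."""
    return boundary_clearance(footprint, parcel) >= min_setback_m


parcel = [(0.0, 0.0), (20.0, 0.0), (20.0, 30.0), (0.0, 30.0)]
house = [(3.0, 5.0), (15.0, 5.0), (15.0, 20.0), (3.0, 20.0)]
print(boundary_clearance(house, parcel), complies(house, parcel, 1.5))
```

Because the rule is explicit, a failed check can be reported with the offending clearance, which suits the audit requirements of regulatory data.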

Importantly, to integrate disparate data sets and models, a specialised domain terminology and language needs to be established through the development of geospatial semantics and ontologies.
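A small illustration of why shared terminology matters: crowd-sourced descriptions use everyday words (‘creek’, ‘St’) that must be mapped onto the terms an authoritative data model uses. The sketch below combines a rule (an exact lookup in a controlled vocabulary, much as a gazetteer lookup would work) with a fuzzy fallback, in the spirit of the hybrid approach described above. The vocabulary is hypothetical and far simpler than a real ontology, and difflib's character-level similarity stands in for the semantic similarity measures used in research.

```python
import difflib

# Hypothetical controlled vocabulary: preferred term -> alternative labels
# found in crowd-sourced text (loosely like SKOS prefLabel / altLabel).
VOCABULARY = {
    "watercourse": {"creek", "stream", "river", "brook"},
    "road": {"street", "st", "highway", "lane"},
    "place of worship": {"church", "mosque", "temple"},
}

# Every known label, preferred or alternative, mapped to its preferred term.
LABEL_TO_TERM = {
    label: term for term, alternatives in VOCABULARY.items() for label in alternatives | {term}
}


def to_preferred_term(raw_label, cutoff=0.8):
    """Map a free-text label onto the controlled vocabulary.

    Rule first: an exact match on the normalised label. Fallback: the closest
    fuzzy string match (difflib), which tolerates misspellings. Returns None when
    nothing is close enough, leaving the label for a human editor.
    """
    label = raw_label.strip().lower()
    if label in LABEL_TO_TERM:
        return LABEL_TO_TERM[label]
    close = difflib.get_close_matches(label, list(LABEL_TO_TERM), n=1, cutoff=cutoff)
    return LABEL_TO_TERM[close[0]] if close else None


for raw in ["Creek ", "strem", "St", "helipad"]:
    print(repr(raw), "->", to_preferred_term(raw))
```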

Finally, improvements in the locational accuracy of mobile sensors, particularly smartphones, will provide opportunities to improve the quality of a range of geospatial data sets. The recent release of a dual-frequency GNSS chipset for smartphones now provides the opportunity to capture improved 2D and 3D positional data. Potential applications may include the collection of positional data for transport or infrastructure, which may improve the locational accuracy of data sets with low spatial quality. The improved 3D accuracy of device locations may also provide better information to support emergency services in search and rescue.
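The paper does not say why a dual-frequency chipset improves positions. One well-established reason is that the ionosphere delays signals by an amount that depends on frequency, so measuring the same range on two frequencies lets a receiver cancel most of that delay. A minimal sketch of the standard ionosphere-free pseudorange combination, assuming the L1/E1 and L5/E5a frequencies used by dual-frequency smartphone chipsets; the range and delay values are illustrative only.

```python
F_L1 = 1575.42e6  # GPS L1 / Galileo E1 carrier frequency (Hz)
F_L5 = 1176.45e6  # GPS L5 / Galileo E5a carrier frequency (Hz)


def ionosphere_free(p1_m, p5_m, f1=F_L1, f5=F_L5):
    """Ionosphere-free combination of two pseudoranges (metres).

    P_IF = (f1^2 * P1 - f5^2 * P5) / (f1^2 - f5^2)

    First-order ionospheric delay scales with 1/f^2, so this weighting cancels it.
    The weights sum to one, so frequency-independent terms (geometric range,
    clock errors, tropospheric delay) pass through unchanged.
    """
    g1, g5 = f1 ** 2, f5 ** 2
    return (g1 * p1_m - g5 * p5_m) / (g1 - g5)


# Illustrative numbers: a 22,000 km range with 5 m of ionospheric delay on L1.
true_range = 22_000_000.0
delay_l1 = 5.0
delay_l5 = delay_l1 * (F_L1 / F_L5) ** 2  # same ionosphere, larger delay at the lower frequency
print(ionosphere_free(true_range + delay_l1, true_range + delay_l5) - true_range)
```

The cancellation has a cost: with equal, independent noise on both pseudoranges, the combination is roughly 2.6 times noisier than either input, so it pays off mainly when ionospheric error dominates.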

7. Conclusions

We are now living in a highly data-driven and data-centric society in which the expectations of data users are ever increasing. The geospatial industry has continued to lead in the development of innovative solutions that provide improved outcomes for citizens and communities. The big data environment presents a range of challenges for the custodians of authoritative geospatial data sets, and opportunities for industries that are seeking to embrace it.

References

Bakri, A & Fairbairn, D 2011, 'User Generated Content and Formal Data Sources for Integrating Geospatial Data', in A Ruas (ed.), Proceedings of the 25th International Cartographic Conference, Paris, France.

Bishr, M & Kuhn, W 2007, 'Geospatial information bottom-up: A matter of trust and semantics', in S Fabrikant & M Wachowicz (eds), The European information society: Leading the way with geo-information, Springer, Berlin, pp. 365-87.

Budhathoki, NR & Nedovic-Budic, Z 2008, 'Reconceptualizing the role of the user of spatial data infrastructure', GeoJournal, vol. 72, no. 3-4, pp. 149-60.

de Albuquerque, JP, Herfort, B, Brenning, A & Zipf, A 2015, 'A geographic approach for combining social media and authoritative data towards identifying useful information for disaster management', International Journal of Geographical Information Science, vol. 29, no. 4, pp. 667-89.

De Longueville, B, Ostlander, N & Keskitalo, C 2010, 'Addressing vagueness in Volunteered Geographic Information (VGI)–A case study', International Journal of Spatial Data Infrastructures Research, vol. 5, pp. 1725-0463.

Diaz, L, Remke, A, Kauppinen, T, Degbelo, A, Foerster, T, Stasch, C, Rieke, M, Baranski, B, Broring, A & Wytzisk, A 2012, 'Future SDI – Impulses from Geoinformatics Research and IT Trends', International Journal of Spatial Data Infrastructures Research, vol. 7, pp. 378-410.

Elwood, S 2008, 'Volunteered geographic information: future research directions motivated by critical, participatory, and feminist GIS', GeoJournal, vol. 72, no. 3-4, pp. 173-83.

Evans, MR, Oliver, D, Zhou, X & Shekhar, S 2014, 'Spatial Big Data: Case Studies on Volume, Velocity, and Variety', in HA Karimi (ed.), Big Data: Techniques and Technologies in Geoinformatics, CRC Press, pp. 149-76.

Flanagin, AJ & Metzger, MJ 2008, 'The credibility of volunteered geographic information', GeoJournal, vol. 72, no. 3-4, pp. 137-48.

Goodchild, MF 2007, 'Citizens as sensors: the world of volunteered geography', GeoJournal, vol. 69, no. 4, pp. 211-21.

Goodchild, MF & Glennon, JA 2010, 'Crowdsourcing geographic information for disaster response: a research frontier', International Journal of Digital Earth, vol. 3, no. 3, pp. 231-41.

Harris, TM & Lafone, HF 2012, 'Toward an informal Spatial Data Infrastructure: Voluntary Geographic Information, Neogeography, and the role of citizen sensors', in K Cerbova & O Cerba (eds), SDI, Communities and Social Media, Czech Centre for Science and Society, Prague, Czech Republic, pp. 8-21.

Hart, G & Dolbear, C 2013, Linked Data: A Geographic Perspective, CRC Press, NW, USA.

Heipke, C 2010, 'Crowdsourcing geospatial data', ISPRS Journal of Photogrammetry and Remote Sensing, vol. 65, no. 6, pp. 550-7.

Howe, J 2006, 'The rise of crowdsourcing', Wired magazine, vol. 14, no. 6, June 2006, pp. 1-4.

Jackson, M, Rahemtulla, H & Morley, J 2010, 'The synergistic use of authenticated and crowd-sourced data for emergency response', in Proceedings of the 2nd International Workshop on Validation of Geo-Information Products for Crisis Management (VAL-gEO), Ispra, Italy.

Koswatte, S, McDougall, K & Liu, X 2015, 'SDI and crowdsourced spatial information management automation for disaster management', Survey Review, vol. 47, no. 344, pp. 307-15.

Koswatte, S, McDougall, K & Liu, X 2016, 'Semantic Location Extraction from Crowdsourced Data', ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, pp. 543-7.

Li, S, Dragicevic, S, Castro, F, Sester, M, Winter, S, Coltekin, A, Pettit, C, Jiang, B, Haworth, J, Stein, A & Cheng, T 2016, 'Geospatial big data handling theory and methods: A review and research challenges', ISPRS Journal of Photogrammetry and Remote Sensing, vol. 115, pp. 119-33.

McDougall, K 2006, 'A Local-State Government Spatial Data Sharing Partnership Model to Facilitate SDI Development', The University of Melbourne, Melbourne.

Spinsanti, L & Ostermann, F 2010, 'Validation and relevance assessment of volunteered geographic information in the case of forest fires', in Proceedings of the Validation of Geo-Information Products for Crisis Management Workshop (ValGeo 2010), JRC Ispra.

Zhang, X, Yin, W, Yang, M, Ai, T & Stoter, J 2018, 'Updating authoritative spatial data from timely sources: A multiple representation approach', International Journal of Applied Earth Observation and Geoinformation, vol. 72, pp. 42-56.

Biographical notes

Professor Kevin McDougall is currently the Head of the School of Civil Engineering and Surveying at the University of Southern Queensland (USQ). He holds a Bachelor of Surveying (First Class Honours) and a Master of Surveying and Mapping Science from the University of Queensland, and a PhD from the University of Melbourne. Prior to his current position, Kevin held appointments as Deputy Dean, Associate Dean (Academic) and Head of the Department of Surveying and Spatial Science at USQ. He has served on a range of industry bodies and in a range of positions, including the Queensland Board of Surveyors, as President of the Australasian Spatial Information Education and Research Association (ASIERA) from 2002 to 2008, and on the Board of Trustees of the Queensland Surveying Education Foundation. Kevin is a Fellow of the Spatial Sciences Institute and a Member of The Institution of Surveyors Australia.

Dr Saman Koswatte obtained his PhD from the School of Civil Engineering and Surveying, Faculty of Health, Engineering and Sciences, University of Southern Queensland, Australia. He undertakes research in the fields of crowdsourced geospatial data, geospatial semantics and spatial data infrastructures. He holds an MPhil degree in Remote Sensing and GIS from the University of Peradeniya, Sri Lanka, and a BSc degree in Surveying Sciences from Sabaragamuwa University of Sri Lanka. He is a Senior Lecturer in the Faculty of Geomatics, Sabaragamuwa University of Sri Lanka, and has over ten years of undergraduate teaching experience in the field of Geomatics.

Contacts

Professor Kevin McDougall

School of Civil Engineering and Surveying

University of Southern Queensland

West Street, Toowoomba Qld 4350, AUSTRALIA

Tel. +617 4631 2545

Email: mcdougak[at]usq.edu.au

Dr Saman Koswatte

Department of Remote Sensing and GIS

Faculty of Geomatics,

Sabaragamuwa University of Sri Lanka,

P.O. Box 02, Belihuloya, 70140, SRI LANKA

Tel. +944 5345 3019

Email: sam[at]geo.sab.ac.lk